* Environment: Ubuntu 20.04

* Deploy server: 1

* Ceph servers: 3

* Ceph version: Octopus

0. Initial setup (SSH key distribution with ssh-keygen)

$ ssh-keygen

$ ssh-copy-id deploy

$ ssh-copy-id ceph-1

$ ssh-copy-id ceph-2

$ ssh-copy-id ceph-3
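The four ssh-copy-id calls above can be collapsed into a loop. The following is a minimal sketch, assuming the host names from this post resolve on the deploy server (for example through /etc/hosts). The copy_keys function and the DRY_RUN variable are names made up for illustration, not part of any tool.

```shell
# Hypothetical helper: copy the local public key to every host passed as an argument.
# With DRY_RUN=1 the commands are only printed, not executed.
copy_keys() {
    local host
    for host in "$@"; do
        if [ "${DRY_RUN:-0}" = "1" ]; then
            echo "ssh-copy-id $host"
        else
            ssh-copy-id "$host"
        fi
    done
}

# Preview the commands for the hosts used in this post.
DRY_RUN=1 copy_keys deploy ceph-1 ceph-2 ceph-3
```

Dropping DRY_RUN=1 runs the real ssh-copy-id for each host and prompts for each password in turn.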

1. Install Ansible and download ceph-ansible

$ apt update;apt -y install python3-pip

$ apt-get install git

$ apt-get install ansible

$ apt-get install sshpass

$ git clone https://github.com/ceph/ceph-ansible.git; cd ceph-ansible/

$ git checkout stable-5.0

Branch 'stable-5.0' set up to track remote branch 'stable-5.0' from 'origin'.

Switched to a new branch 'stable-5.0'

$ git status

On branch stable-5.0

Your branch is up to date with 'origin/stable-5.0'.

Stable branches (supported Ceph and Ansible versions)

stable-3.0 Supports Ceph versions jewel and luminous. This branch requires Ansible version 2.4.

stable-3.1 Supports Ceph versions luminous and mimic. This branch requires Ansible version 2.4.

stable-3.2 Supports Ceph versions luminous and mimic. This branch requires Ansible version 2.6.

stable-4.0 Supports Ceph version nautilus. This branch requires Ansible version 2.9.

stable-5.0 Supports Ceph version octopus. This branch requires Ansible version 2.9.

stable-6.0 Supports Ceph version pacific. This branch requires Ansible version 2.9.

master Supports the master branch of Ceph. This branch requires Ansible version 2.10.
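The branch list above is effectively a lookup table from Ceph release to ceph-ansible branch. A small sketch of that lookup follows, using only the pairs listed above. The branch_for_release function is a hypothetical name, and where a release appears under several branches the newest listed branch is chosen, which is an assumption rather than an official recommendation.

```shell
# Hypothetical lookup: print the ceph-ansible branch for a given Ceph release.
# luminous and mimic appear under several branches; the newest listed one is returned.
branch_for_release() {
    case "$1" in
        jewel)          echo "stable-3.0" ;;
        luminous|mimic) echo "stable-3.2" ;;
        nautilus)       echo "stable-4.0" ;;
        octopus)        echo "stable-5.0" ;;
        pacific)        echo "stable-6.0" ;;
        *)              echo "unknown release: $1" >&2; return 1 ;;
    esac
}

# The release used in this post.
branch_for_release octopus
```

Inside the ceph-ansible checkout this could be combined with git, for example git checkout "$(branch_for_release octopus)".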

$ pip3 install -r requirements.txt

$ vi /etc/ansible/hosts

[ceph]

10.101.0.3

10.101.0.16

10.101.0.21

$ ansible all -m ping -k

SSH password:

10.101.0.3 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

10.101.0.21 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

10.101.0.16 | SUCCESS => {

"ansible_facts": {

"discovered_interpreter_python": "/usr/bin/python3"

},

"changed": false,

"ping": "pong"

}

Fixes for errors raised while running pip3 install -r requirements.txt

ImportError: No module named typing -> pip3 install typing

ModuleNotFoundError: No module named 'setuptools_rust' -> pip3 install setuptools_rust

ERROR: 'pip wheel' requires the 'wheel' package. To fix this, run: pip install wheel -> pip3 install wheel

2. Configure ceph-ansible

Inventory

$ cat inventory.ini

[mons]

ceph-1 ansible_host="10.101.0.3"

ceph-2 ansible_host="10.101.0.16"

ceph-3 ansible_host="10.101.0.21"

[osds:children]

mons

[mgrs:children]

mons

[monitoring:children]

mons

[clients:children]

mons

[mdss:children]

mons

[grafana-server]

deploy ansible_host="10.101.0.5"

[horizon]

deploy ansible_host="10.101.0.5"
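In this inventory only [mons] lists hosts directly; the osds, mgrs, monitoring, clients and mdss groups reuse them through :children, so every Ceph daemon lands on the same three nodes. If the node list changes often, the [mons] section can be generated instead of typed. This is a sketch only; mons_section is a hypothetical helper and the name=ip argument format is an assumption made for this example.

```shell
# Hypothetical generator: emit a [mons] section from name=ip arguments.
mons_section() {
    local pair
    echo "[mons]"
    for pair in "$@"; do
        # ${pair%%=*} is the host name, ${pair#*=} is the IP address.
        printf '%s ansible_host="%s"\n' "${pair%%=*}" "${pair#*=}"
    done
}

# The three Ceph nodes from this post.
mons_section ceph-1=10.101.0.3 ceph-2=10.101.0.16 ceph-3=10.101.0.21
```

Once inventory.ini is written, ansible-inventory -i inventory.ini --graph prints the resulting group tree, which is a quick way to confirm that the :children groups resolve to the expected hosts.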

Edit all.yml

$ cat group_vars/all.yml

---

dummy:

cluster: ceph

ntp_service_enabled: true

ntp_daemon_type: chronyd

monitor_interface: ens3

public_network: "10.101.0.0/16"

cluster_network: "10.101.0.0/16"

ip_version: ipv4

osd_group_name: osds

containerized_deployment: true

ceph_docker_image: "ceph/daemon"

ceph_docker_image_tag: latest-master

ceph_docker_registry: docker.io

ceph_docker_registry_auth: false

ceph_client_docker_image: "{{ ceph_docker_image }}"

ceph_client_docker_image_tag: "{{ ceph_docker_image_tag }}"

ceph_client_docker_registry: "{{ ceph_docker_registry }}"

dashboard_enabled: True

dashboard_protocol: https

dashboard_port: 8443

dashboard_admin_user: admin

dashboard_admin_user_ro: false

dashboard_admin_password: p@ssw0rd

grafana_admin_user: admin

grafana_admin_password: admin

Note: ceph_docker_image_tag: latest-master pulls a build of the Ceph development branch rather than Octopus, which is why ceph mon dump in step 4 reports min_mon_release 17 (quincy). To stay on Octopus, pin an Octopus tag of ceph/daemon instead, such as latest-octopus.

Edit osds.yml

$ grep -vE '^#|^$' group_vars/osds.yml

---

dummy:

copy_admin_key: true

devices:

  - /dev/vdb

3. Run the playbook

There are several ways to run the playbook; this post uses the containerized deployment.

$ ansible-playbook -i inventory.ini site-container.yml.sample
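ansible-playbook accepts the .sample file directly, as in the command above. A common alternative is to copy the sample to site-container.yml first, so that local edits do not touch the file tracked by git. The sketch below does that copy only when the target is missing; prepare_playbook is a hypothetical helper name.

```shell
# Hypothetical helper: create <name>.yml from <name>.yml.sample unless it already
# exists, then print the path of the playbook to run.
prepare_playbook() {
    local sample="$1"
    local target="${sample%.sample}"
    if [ ! -e "$target" ]; then
        cp "$sample" "$target"
    fi
    echo "$target"
}
```

From the ceph-ansible directory it could be used as ansible-playbook -i inventory.ini "$(prepare_playbook site-container.yml.sample)".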

4. Verify the services

root@deploy:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

cced40dba8c2 prom/prometheus:v2.7.2 "/bin/prometheus --c…" 5 weeks ago Up 5 weeks prometheus

ec8daf57e052 prom/alertmanager:v0.16.2 "/bin/alertmanager -…" 5 weeks ago Up 5 weeks alertmanager

cd4c384e0cda grafana/grafana:5.4.3 "/run.sh" 5 weeks ago Up 5 weeks grafana-server

61da4a5ea79b prom/node-exporter:v0.17.0 "/bin/node_exporter …" 5 weeks ago Up 5 weeks node-exporter

root@ceph-1:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

d3fe33d59a92 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-mon-ceph-1

526ba3538049 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-osd-0

c98c0849c190 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-mds-ceph-1

df9b62a9a032 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-mgr-ceph-1

242c57d1a940 prom/node-exporter:v0.17.0 "/bin/node_exporter …" 15 hours ago Up 15 hours node-exporter

3ba9109fae00 ceph/daemon:latest-master "/usr/bin/ceph-crash" 15 hours ago Up 15 hours ceph-crash-ceph-1

root@ceph-2:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

40cd68a6a97b ceph/daemon:latest-master "/usr/bin/ceph-crash" 5 weeks ago Up 5 weeks ceph-crash-ceph-2

d5bb4b86b71f prom/node-exporter:v0.17.0 "/bin/node_exporter …" 5 weeks ago Up 5 weeks node-exporter

e29d6787609b ceph/daemon:latest-master "/opt/ceph-container…" 5 weeks ago Up 5 weeks ceph-mds-ceph-2

86ec284888df ceph/daemon:latest-master "/opt/ceph-container…" 5 weeks ago Up 5 weeks ceph-osd-2

622760a70847 ceph/daemon:latest-master "/opt/ceph-container…" 5 weeks ago Up 5 weeks ceph-mgr-ceph-2

efcbfe11c367 ceph/daemon:latest-master "/opt/ceph-container…" 5 weeks ago Up 5 weeks ceph-mon-ceph-2

root@ceph-3:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

2e18501f5c0c ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-mon-ceph-3

d3f5b1cf8192 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-osd-1

0957fd244d36 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-mgr-ceph-3

3c33fc496cf7 ceph/daemon:latest-master "/opt/ceph-container…" 15 hours ago Up 15 hours ceph-mds-ceph-3

f2ae2942d0aa ceph/daemon:latest-master "/usr/bin/ceph-crash" 15 hours ago Up 15 hours ceph-crash-ceph-3

e5ecbc002e23 prom/node-exporter:v0.17.0 "/bin/node_exporter …" 15 hours ago Up 15 hours node-exporter

root@ceph-1:~# docker exec ceph-mgr-ceph-1 ceph -s

cluster:

id: 93be09f7-678d-4ef1-9ea6-8988ba883b4a

health: HEALTH_WARN

mons are allowing insecure global_id reclaim

services:

mon: 3 daemons, quorum ceph-1,ceph-2,ceph-3 (age 15h)

mgr: ceph-3(active, since 15h), standbys: ceph-2, ceph-1

mds: 1/1 daemons up, 2 standby

osd: 3 osds: 3 up (since 15h), 3 in (since 5w)

data:

volumes: 1/1 healthy

pools: 3 pools, 192 pgs

objects: 22 objects, 3.8 KiB

usage: 1.8 GiB used, 28 GiB / 30 GiB avail

pgs: 192 active+clean
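For scripted checks, the health line can be pulled out of the ceph -s output. This is a minimal sketch that reads the text on stdin; ceph_health is a hypothetical helper, and the sample input is copied from the output above.

```shell
# Hypothetical helper: read `ceph -s` output on stdin and print the health status.
ceph_health() {
    awk '$1 == "health:" { print $2; exit }'
}

# Sample input taken from the cluster section shown above.
printf '%s\n' \
    'cluster:' \
    'id: 93be09f7-678d-4ef1-9ea6-8988ba883b4a' \
    'health: HEALTH_WARN' | ceph_health
```

On a live node the same function can be fed directly, for example docker exec ceph-mgr-ceph-1 ceph -s | ceph_health. The specific warning shown here, "mons are allowing insecure global_id reclaim", is typically cleared with ceph config set mon auth_allow_insecure_global_id_reclaim false once all clients are on a patched version; treat that as a suggestion to verify against the Ceph release in use.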

root@ceph-1:~# docker exec ceph-mgr-ceph-1 ceph mon dump

epoch 1

fsid 93be09f7-678d-4ef1-9ea6-8988ba883b4a

last_changed 2021-07-01T16:00:38.158093+0900

created 2021-07-01T16:00:38.158093+0900

min_mon_release 17 (quincy)

election_strategy: 1

0: [v2:10.101.0.4:3300/0,v1:10.101.0.4:6789/0] mon.ceph-1

1: [v2:10.101.0.7:3300/0,v1:10.101.0.7:6789/0] mon.ceph-2

2: [v2:10.101.0.25:3300/0,v1:10.101.0.25:6789/0] mon.ceph-3

dumped monmap epoch 1

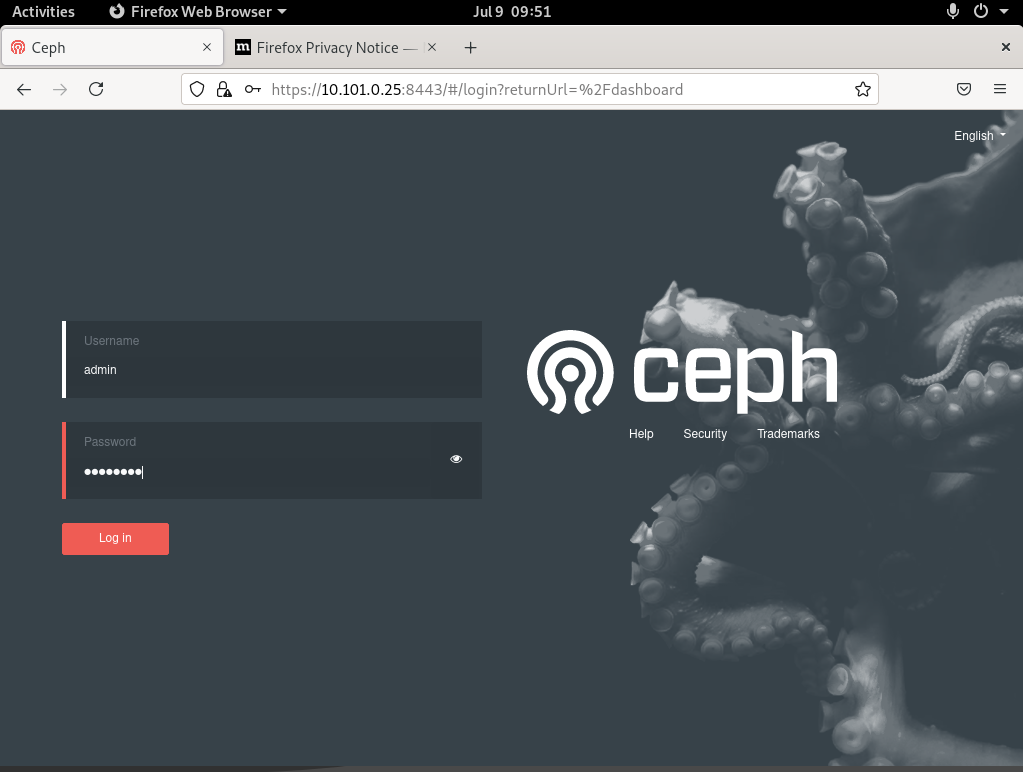

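Connecting to port 8443 on the Ceph mgr IP (https://<ceph mgr IP>:8443) opens the Ceph Dashboard login page.

After logging in, you can check the state of the Ceph cluster.

The same information is also available through Grafana.

Because Grafana and Prometheus were installed on the deploy server, connecting to deploy:3000 brings up a Grafana dashboard where you can check the cluster status.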
